Bangladesh to File U.S. Suit Over Central Bank Heist

8.2.2018 securityweek Cyber

Bangladesh's central bank will file a lawsuit in New York against a Philippine bank over the world's largest cyber heist, the finance minister said Wednesday.

Unidentified hackers stole $81 million in February 2016 from the Bangladesh central bank's account with the US Federal Reserve in New York.

The money was transferred to a Manila branch of the Rizal Commercial Banking Corp (RCBC), then quickly withdrawn and laundered through local casinos.

With only a small amount of the stolen money recovered and frustration growing in Dhaka, Bangladesh's Finance Minister A.M.A. Muhith said last year he wanted to "wipe out" RCBC.

On Wednesday he said Bangladesh Bank lawyers were discussing the case in New York and may file a joint lawsuit against the RCBC with the US Federal Reserve.

"It will be (filed) in New York. Fed may be a party," he told reporters in Dhaka.

The deputy central bank governor Razee Hassan told AFP the case would be filed in April.

"They (RCBC) are the main accused," he said.

"Rizal Commercial Banking Corporation (RCBC) and its various officials are involved in money heist from Bangladesh Bank's reserve account and the bank is liable in this regard," Hassan said in a written statement.

The Philippines in 2016 imposed a record $21 million fine on RCBC after investigating its role in the audacious cyber heist.

Philippine authorities have also filed money-laundering charges against the RCBC branch manager.

The bank has rejected the allegations and last year accused Bangladesh's central bank of a "massive cover-up".

The hackers bombarded the US Federal Reserve with dozens of transfer requests, attempting to steal a further $850 million.

But the bank's security systems and typing errors in some requests prevented the full theft.

The hack took place on a Friday, when Bangladesh Bank is closed. The Federal Reserve Bank in New York is closed on Saturday and Sunday, slowing the response.

The US reserve bank, which manages the Bangladesh Bank reserve account, has denied its own systems were breached.

Cryptocurrency Mining Malware Hits Monitoring Systems at European Water Utility

8.2.2018 securityweek CoinMine

Malware Chewed Up CPU of HMI at Wastewater Facility

Cryptocurrency mining malware worked its way onto four servers connected to an operational technology (OT) network at a wastewater facility in Europe, industrial cybersecurity firm Radiflow told SecurityWeek Wednesday.

Radiflow says the incident is the first documented cryptocurrency malware attack to hit an OT network of a critical infrastructure operator.

The servers were running Windows XP and CIMPLICITY SCADA software from GE Digital.

“In this case the [infected] server was a Human Machine Interface (HMI),” Yehonatan Kfir, CTO at Radiflow, told SecurityWeek. “The main problem,” Kfir continued, “is that this kind of malware in an OT network slows down the HMIs. Those servers are responsible for monitoring physical processes.”

Radiflow wasn’t able to name the exact family of malware it found, but said the threat was designed to mine Monero cryptocurrency and was discovered as part of routine monitoring of the OT network of the water utility customer.

“A cryptocurrency malware attack increases device CPU and network bandwidth consumption, causing the response times of tools used to monitor physical changes on an OT network, such as HMI and SCADA servers, to be severely impaired,” the company explained. “This, in turn, reduces the control a critical infrastructure operator has over its operations and slows down its response times to operational problems.”

While the investigation is still underway, Radiflow’s team has determined that the cryptocurrency malware was designed to run in a stealth mode on a computer or device, and even disable its security tools in order to operate undetected and maximize its mining processes for as long as possible.

“Cryptocurrency malware attacks involve extremely high CPU processing and network bandwidth consumption, which can threaten the stability and availability of the physical process of a critical infrastructure operator,” Kfir said. “While it is known that ransomware attacks have been launched on OT networks, this new case of a cryptocurrency malware attack on an OT network poses new threats as it runs in stealth mode and can remain undetected over time.”

“PCs in an OT network run sensitive HMI and SCADA applications that cannot get the latest Windows, antivirus and other important updates, and will always be vulnerable to malware attacks,” Kfir said.

While the malware was able to infect an HMI machine at a critical infrastructure operator, the attack was likely not specifically targeted at the water utility.

Thousands of industrial facilities have their systems infected with common malware every year, and the number of attacks targeting ICS is higher than it appears, according to a 2017 report by industrial cybersecurity firm Dragos.

Existing public information on ICS attacks shows numbers that are either very high (e.g. over 500,000 attacks according to unspecified reports cited by Dragos), or very low (e.g. roughly 290 incidents per year reported by ICS-CERT). In its report, Dragos set out to provide more realistic numbers on malware infections in ICS, based on information available from public sources such as VirusTotal, Google and DNS data.

As part of a project it calls MIMICS (malware in modern ICS), Dragos was able to identify roughly 30,000 samples of malicious ICS files and installers dating back to 2003. Non-targeted infections involving viruses such as Sivis, Ramnit and Virut are the most common, followed by Trojans that can provide threat actors access to Internet-facing environments.

These incidents may not be as severe as targeted attacks and they are unlikely to cause physical damage or pose a safety risk. However, they can cause liability issues and downtime to operations, which leads to increased financial costs, Robert M. Lee, CEO and founder of Dragos, told SecurityWeek in March 2017.

One example is the incident involving a German nuclear energy plant in Gundremmingen, whose systems got infected with Conficker and Ramnit malware. The malware did not cause any damage and it was likely picked up by accident, but the incident did trigger a shutdown of the plant as a precaution.

Stealthy Data Exfiltration Possible via Magnetic Fields

8.2.2018 securityweek Virus

Researchers have demonstrated that a piece of malware present on an isolated computer can use magnetic fields to exfiltrate sensitive data, even if the targeted device is inside a Faraday cage.

A team of researchers at the Ben-Gurion University of the Negev in Israel have created two types of proof-of-concept (PoC) malware that use magnetic fields generated by a device’s CPU to stealthily transmit data.

A magnetic field is a force field created by moving electric charges (e.g. electric current flowing through a wire) and magnetic dipoles, and it exerts a force on other nearby moving charges and magnetic dipoles. The properties of a magnetic field are direction and strength.

The CPUs present in modern computers generate low frequency magnetic signals which, according to researchers, can be manipulated to transmit data over an air gap.

The attacker first needs to somehow plant a piece of malware on the air-gapped device from which they want to steal data. The Stuxnet attack and other incidents have shown that this task can be accomplished by a motivated attacker.

Once the malware is in place, it can collect small pieces of information, such as keystrokes, passwords and encryption keys, and send them to a nearby receiver.

The malware can manipulate the magnetic fields generated by the CPU by regulating its workload – for example, overloading the processor with calculations increases power consumption and generates a stronger magnetic field.

The collected data can be modulated using one of two schemes proposed by the researchers. Using on-off keying (OOK) modulation, an attacker can transmit “0” or “1” bits through the signal generated by the magnetic field – the presence of a signal represents a “1” bit and its absence a “0” bit.

Since the frequency of the signal can also be manipulated, the malware can use a specific frequency to transmit “1” bits and a different frequency to transmit “0” bits. This is known as binary frequency-shift keying (FSK) modulation.
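The two modulation schemes described above can be illustrated with a minimal Python sketch. It only maps bytes to abstract symbols and back; it does not generate any CPU load or magnetic signal, and it is not the researchers' code. The function names and the two example frequencies are assumptions made for illustration.

```python
from typing import List


def ook_symbols(data: bytes) -> List[bool]:
    """On-off keying: True means "signal present" (1), False means "absent" (0).

    Bits are emitted most significant bit first.
    """
    return [bool((byte >> shift) & 1) for byte in data for shift in range(7, -1, -1)]


def bfsk_symbols(data: bytes, f0_hz: float, f1_hz: float) -> List[float]:
    """Binary FSK: one frequency per bit value (f0_hz for 0, f1_hz for 1).

    The frequencies are placeholders chosen by the caller, not values from the paper.
    """
    return [f1_hz if bit else f0_hz for bit in ook_symbols(data)]


def decode_ook(symbols: List[bool]) -> bytes:
    """Rebuild bytes from OOK symbols, ignoring any trailing partial byte."""
    out = bytearray()
    usable = len(symbols) - len(symbols) % 8
    for start in range(0, usable, 8):
        byte = 0
        for bit in symbols[start:start + 8]:
            byte = (byte << 1) | int(bit)
        out.append(byte)
    return bytes(out)
```

For example, the byte for "A" (0x41, binary 01000001) becomes eight OOK symbols with the signal present only in the second and last slots, and `decode_ook(ook_symbols(b"key"))` returns the original bytes.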

Ben-Gurion University researchers have developed two pieces of malware that rely on magnetic fields to exfiltrate data from an air-gapped device. One of them is called ODINI and it uses this method to transmit the data to a nearby magnetic sensor. The second piece of malware is named MAGNETO and it sends data to a smartphone; smartphones typically have magnetometers for determining the device’s orientation.

In the case of ODINI, experts managed to achieve a maximum transfer rate of 40 bits/sec over a distance of 100 to 150 cm (3-5 feet). MAGNETO is less efficient, with a rate of only 0.2 - 5 bits/sec over a distance of up to 12.5 cm (5 inches). Since transmitting one character requires 8 bits, these methods can be efficient for stealing small pieces of sensitive information, such as passwords.
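To put these rates in perspective, the short calculation below estimates how long a secret of a given length would take to send at the reported speeds, using the 8 bits per character stated above. The 16-character password length is an assumption chosen for illustration, and the sketch ignores any framing or error-correction overhead.

```python
def transfer_seconds(num_chars: int, bits_per_second: float, bits_per_char: int = 8) -> float:
    """Time needed to send num_chars characters at a given raw bit rate."""
    return num_chars * bits_per_char / bits_per_second


PASSWORD_LENGTH = 16  # assumed example length, not a figure from the research

odini_seconds = transfer_seconds(PASSWORD_LENGTH, 40)          # ODINI, maximum rate
magneto_fast_seconds = transfer_seconds(PASSWORD_LENGTH, 5)    # MAGNETO, upper bound
magneto_slow_seconds = transfer_seconds(PASSWORD_LENGTH, 0.2)  # MAGNETO, lower bound
```

Under these assumptions, a 16-character password takes about 3.2 seconds with ODINI, and between roughly 26 seconds and more than 10 minutes with MAGNETO.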

Researchers demonstrated that ODINI and MAGNETO also work if the targeted air-gapped device is inside a Faraday cage, an enclosure used to block electromagnetic fields, including Wi-Fi, Bluetooth, cellular and other wireless communications.

In the case of MAGNETO, the malware was able to transmit data even if the smartphone was placed inside a Faraday bag or if the phone was set to airplane mode.

Ben-Gurion researchers have found several ways of exfiltrating data from air-gapped networks, including through infrared cameras, router LEDs, scanners, HDD activity LEDs, USB devices, the noise emitted by hard drives and fans, and heat emissions.

Meet PinMe, a Brand New Attack to Track Smartphones With GPS Turned Off

8.2.2018 securityaffairs Attack

Researchers from Princeton University have developed an app called PinMe that locates and tracks smartphones without using GPS.

The research team, led by Prateek Mittal, assistant professor in Princeton’s Department of Electrical Engineering and co-author of the PinMe paper, developed the PinMe application, which mines information stored on smartphones that does not require permissions to access.

The data is processed alongside publicly available maps and weather reports to infer whether a person is traveling by foot, car, train or airplane, and along which route. The potential applications for intelligence and law enforcement agencies investigating crimes such as kidnapping, missing persons and terrorism are significant.

As the researchers note, the application uses a series of algorithms to locate and track someone using information such as the phone’s IP address and time zone, combined with data from its sensors. The application collects compass details from the gyroscope, air pressure readings from the barometer, and accelerometer data, all without the user noticing. The processed data can be used to extract contextual information about users’ habits, regular activities, and even relationships.

Like many technologies, this one cuts both ways: it can help solve crimes, but it also has implications for users’ privacy and security. By revealing this sensor security flaw, the researchers hope to spur the development of security measures that allow sensor data to be switched off. Today, such sensor data is collected by fitness and game applications to track people’s movements.

Another area where the application could be a game changer is as an alternative navigation tool, as the researchers highlight. GPS signals used by autonomous cars and ships can be targeted by hackers, putting the safety of passengers in danger. The researchers conducted their experiments using a Galaxy S4 i9500, an iPhone 6 and an iPhone 6S. To determine the last Wi-Fi connection, the PinMe application read the latest IP address used and the network status.

To determine how a user is traveling, the application uses a machine learning algorithm that recognizes the different patterns of walking, driving and flying from data gathered by the phone’s sensors, such as speed, direction of travel, delay between movements and altitude.

Once the user’s activity pattern has been determined, the application executes one of four additional algorithms to determine the type of transportation. The user’s route is then determined by comparing the phone data against public information. Maps from Google and the U.S. Geological Survey were used to obtain altitude details for every point on Earth. Temperature, humidity and air pressure reports were also used to determine the use of trains or planes.
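As an illustration of why barometer readings are useful for this kind of inference, the sketch below converts air pressure to an approximate altitude with the widely used international barometric formula. This is not PinMe's algorithm, which the article does not describe in detail; the function names and the example readings are assumptions.

```python
SEA_LEVEL_PRESSURE_HPA = 1013.25  # standard atmosphere reference value


def pressure_to_altitude_m(pressure_hpa: float,
                           sea_level_hpa: float = SEA_LEVEL_PRESSURE_HPA) -> float:
    """Approximate altitude in meters from air pressure (international barometric formula)."""
    return 44330.0 * (1.0 - (pressure_hpa / sea_level_hpa) ** (1.0 / 5.255))


def climb_rate_m_per_s(start_hpa: float, end_hpa: float, seconds: float) -> float:
    """Average vertical speed between two pressure readings taken `seconds` apart."""
    return (pressure_to_altitude_m(end_hpa) - pressure_to_altitude_m(start_hpa)) / seconds


# Hypothetical readings: pressure falling from 1013.25 hPa to 900 hPa over 10 minutes.
example_altitude_m = pressure_to_altitude_m(900.0)
example_climb = climb_rate_m_per_s(1013.25, 900.0, 600.0)
```

A reading of 900 hPa corresponds to an altitude of roughly 1 km under standard conditions. Comparing such estimates against elevation maps, or against local weather reports to correct the sea-level reference, is the kind of cross-check the article describes.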

The researchers also wanted to raise the question of privacy and of data collected without the user’s consent. As Prateek Mittal states: “PinMe demonstrates how information from seemingly innocuous sensors can be exploited using machine-learning techniques to infer sensitive details about our lives”.

Sources:

https://gizmodo.com/how-to-track-a-cellphone-without-gps-or-consent-1821125371

https://nakedsecurity.sophos.com/2017/12/19/gps-is-off-so-you-cant-be-tracked-right-wrong/

https://www.princeton.edu/news/2017/11/29/phones-vulnerable-location-tracking-even-when-gps-services

https://www.theregister.co.uk/2018/02/07/boffins_crack_location_tracking_even_if_youve_turned_off_the_gps/

https://www.helpnetsecurity.com/2018/02/07/location-tracking-no-gps/

https://www.bleepingcomputer.com/news/security/apps-can-track-users-even-when-gps-is-turned-off/

https://arxiv.org/pdf/1802.01468.pdf

http://ieeexplore.ieee.org/document/8038870/?reload=true

For the second time, Cisco issues a security patch to fix a critical vulnerability in Cisco ASA

8.2.2018 securityaffairs Vulnerability

Cisco has rolled out new security patches for a critical vulnerability, tracked as CVE-2018-0101, in its Cisco ASA (Adaptive Security Appliance) software.

At the end of January, the company released security updates for the same flaw in Cisco ASA software. The vulnerability could be exploited by a remote and unauthenticated attacker to execute arbitrary code or trigger a denial-of-service (DoS) condition causing the reload of the system.

The vulnerability resides in the Secure Sockets Layer (SSL) VPN feature implemented by Cisco ASA software. It was discovered by researcher Cedric Halbronn of NCC Group.

The flaw received a Common Vulnerability Scoring System base score of 10.0.

According to Cisco, it is related to the attempt to double free a memory region when the “webvpn” feature is enabled on a device. An attacker can exploit the vulnerability by sending specially crafted XML packets to a webvpn-configured interface.

Further investigation of the flaw revealed additional attack vectors, which is why the company released a new update. The researchers also found a denial-of-service issue affecting Cisco ASA platforms.

“After broadening the investigation, Cisco engineers found other attack vectors and features that are affected by this vulnerability that were not originally identified by the NCC Group and subsequently updated the security advisory,” reads a blog post published by Cisco.

The experts noticed that the flaw is tied to the XML parser in the Cisco ASA software; an attacker can trigger the vulnerability by sending a specially crafted XML file to a vulnerable interface.

The list of affected Cisco ASA products includes:

3000 Series Industrial Security Appliance (ISA)

ASA 5500 Series Adaptive Security Appliances

ASA 5500-X Series Next-Generation Firewalls

ASA Services Module for Cisco Catalyst 6500 Series Switches and Cisco 7600 Series Routers

ASA 1000V Cloud Firewall

Adaptive Security Virtual Appliance (ASAv)

Firepower 2100 Series Security Appliance

Firepower 4110 Security Appliance

Firepower 9300 ASA Security Module

Firepower Threat Defense Software (FTD)

According to Cisco experts, there are no reports of the vulnerability being exploited in the wild. Nevertheless, it is important to apply the security updates immediately.

Automation Software Flaws Expose Gas Stations to Hacker Attacks

7.2.2018 securityweek CyberCrime

Gas stations worldwide are exposed to remote hacker attacks due to several vulnerabilities affecting the automation software they use, researchers at Kaspersky Lab reported on Wednesday.

The vulnerable product is SiteOmat from Orpak, which is advertised by the vendor as the “heart of the fuel station.” The software, designed to run on embedded Linux machines or a standard PC, provides “complete and secure site automation, managing the dispensers, payment terminals, forecourt devices and fuel tanks to fully control and record any transaction.”

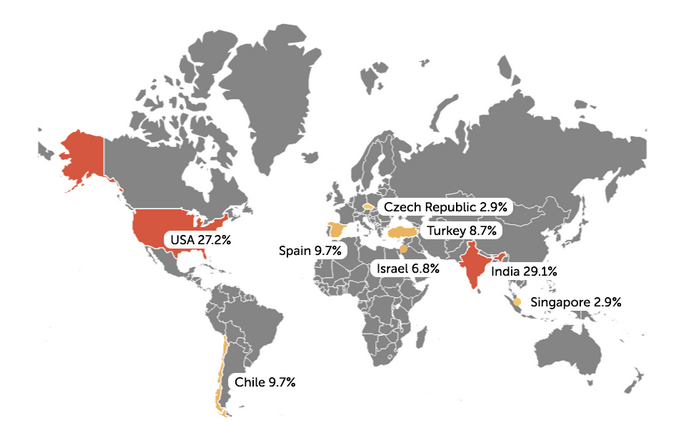

Kaspersky researchers discovered that the “secure” part is not exactly true and more than 1,000 of the gas stations using the product allow remote access from the Internet. Over half of the exposed stations are located in the United States and India.

“Before the research, we honestly believed that all fueling systems, without exception, would be isolated from the internet and properly monitored. But we were wrong,” explained Kaspersky’s Ido Naor. “With our experienced eyes, we came to realize that even the least skilled attacker could use this product to take over a fueling system from anywhere in the world.”

According to the security firm, the vulnerabilities affecting SiteOmat could be exploited by malicious actors for a wide range of purposes, including to modify fuel prices, shut down fueling systems, or cause a fuel leakage.

The security holes can also allow hackers to move laterally within the targeted company’s network, gain access to payment systems and steal financial data, and obtain information on the station’s customers (e.g. license plates, driver identity data). Another possible scenario described by Kaspersky involves disrupting the station’s operations and demanding a ransom.

These attacks are possible due to a series of vulnerabilities, including hardcoded credentials (CVE-2017-14728), persistent XSS (CVE-2017-14850), SQL injection (CVE-2017-14851), insecure communications (CVE-2017-14852), code injection (CVE-2017-14853), and remote code execution (CVE-2017-14854). Exploiting the flaws does not require advanced hacking skills, Naor said.

The fact that the vendor has made available technical information about the device and a detailed user manual made it easier for experts to find the security holes.

The systems analyzed by Kaspersky were often embedded in fueling systems and researchers believe they had been connected to the Internet for more than a decade.

Orpak was informed about the flaws in September and the company told researchers a month later that it had been in the process of rolling out a hardened version of its system, but it has since not shared any updates on the status of patches. SecurityWeek has reached out to the vendor for comment and will update this article if the company responds.

Hackers From Florida, Canada Behind 2016 Uber Breach

7.2.2018 securityweek Hacking

Uber shares more details about 2016 data breach

Two individuals living in Canada and Florida were responsible for the massive data breach suffered by Uber in 2016, the ride-sharing company’s chief information security officer said on Tuesday.

In a hearing before the Senate Subcommittee on Consumer Protection, Product Safety, Insurance, and Data Security, Uber CISO John Flynn shared additional details on the data breach that the company covered up for more than a year.

The details of 57 million Uber riders and drivers were taken from the company’s systems between mid-October and mid-November 2016. The compromised data included names, email addresses, phone numbers, user IDs, password hashes, and the driver’s license numbers of roughly 600,000 drivers. The incident was only disclosed by Uber’s CEO, Dara Khosrowshahi, on November 21, 2017.

Flynn told the Senate committee on Tuesday that the data accessed by the hackers had been stored in an Amazon Web Services (AWS) S3 bucket used for backup purposes. The attackers had gained access to it with credentials they had found in a GitHub repository used by Uber engineers. Uber decided to stop using GitHub for anything other than open source code following the incident.
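Credentials committed to a source repository, as in this incident, can often be caught by a simple scan before code is pushed. The sketch below looks for strings shaped like AWS access key IDs (the prefix `AKIA` followed by 16 uppercase letters or digits). It is an illustrative check only: the article does not say what kind of credentials were exposed, the function name is an assumption, and real secret scanners cover many more patterns.

```python
import re
from typing import List, Tuple

# Shape of a long-term AWS access key ID: "AKIA" plus 16 uppercase letters or digits.
ACCESS_KEY_ID = re.compile(r"\bAKIA[0-9A-Z]{16}\b")


def find_access_key_ids(text: str) -> List[Tuple[int, str]]:
    """Return (line number, match) pairs for strings that look like access key IDs."""
    hits = []
    for line_no, line in enumerate(text.splitlines(), start=1):
        for match in ACCESS_KEY_ID.finditer(line):
            hits.append((line_no, match.group(0)))
    return hits


# Hypothetical config file content; the key is the placeholder used in AWS documentation.
sample_config = 'region = "us-east-1"\naws_access_key_id = "AKIAIOSFODNN7EXAMPLE"\n'
sample_hits = find_access_key_ids(sample_config)
```

Here `sample_hits` contains a single entry pointing at line 2. A check like this could run as a pre-commit hook or in continuous integration, which is a design choice rather than something Uber has described.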

Uber’s security team was contacted on November 14, 2016, by an anonymous individual claiming to have accessed Uber data and demanding a six-figure payment. After confirming that the data obtained by the hackers was valid, the company decided to pay the attackers $100,000 through its HackerOne-based bug bounty program to have them destroy the data they had obtained.

While some members of Uber’s security team were working on containing the incident and finding the point of entry, others were trying to identify the attackers. The man who initially contacted Uber was from Canada and his partner, who actually obtained the data, was located in Florida, the Uber executive said.

“Our primary goal in paying the intruders was to protect our consumers’ data,” Flynn said in a prepared statement. “This was not done in a way that is consistent with the way our bounty program normally operates, however. In my view, the key distinction regarding this incident is that the intruders not only found a weakness, they also exploited the vulnerability in a malicious fashion to access and download data.”

A code of conduct added by HackerOne to its disclosure guidelines last month includes an entry on extortion and blackmail, prohibiting “any attempt to obtain bounties, money or services by coercion.” It’s unclear if this is in response to the Uber incident, but the timing suggests that it may be.

The Uber CISO has not said if any actions have been taken against the hackers, but Reuters reported in December that the Florida resident was a 20-year-old who was living with his mother in a small home, trying to help pay the bills. The news agency learned from sources that Uber had decided not to press charges as the individual did not appear to pose a further threat.

Flynn admitted that “it was wrong not to disclose the breach earlier,” and said the ride-sharing giant has taken steps to ensure that such incidents are avoided in the future. He also admitted that the company should not have used its bug bounty program to deal with extortionists.

Uber’s chief security officer, Joe Sullivan, and in-house lawyer Craig Clark were fired over their roles in the breach. Class action lawsuits have been filed against the company over the incident and some U.S. states have announced launching investigations into the cover-up.

U.S. officials are not happy with the way Uber has handled the situation.

“The fact that the company took approximately a year to notify impacted users raises red flags within this Committee as to what systemic issues prevented such time-sensitive information from being made available to those left vulnerable,” said Sen. Jerry Moran, chairman of the congressional committee.

Just before the Senate hearing, Congresswoman Jan Schakowsky and Congressman Ben Ray Lujan highlighted that Uber had deceived the Federal Trade Commission (FTC) by failing to mention the 2016 breach while the agency had been investigating another, smaller cybersecurity incident suffered by the firm in 2014.

XSS, SQL Injection Flaws Patched in Joomla

7.2.2018 securityweek Vulnerability

One SQL injection and three cross-site scripting (XSS) vulnerabilities have been patched with the release of Joomla 3.8.4 last week. The latest version of the open-source content management system (CMS) also includes more than 100 bug fixes and improvements.

The XSS and SQL injection vulnerabilities affect the Joomla core, but none of them appear to be particularly dangerous – they have all been classified by Joomla developers as “low priority.”

The XSS flaws affect the Uri class (versions 1.5.0 through 3.8.3), the com_fields component (versions 3.7.0 through 3.8.3), and the Module chrome (versions 3.0.0 through 3.8.3).

The SQL injection vulnerability is considered more serious – Joomla developers have classified it as low severity, but high impact.

The security hole, tracked as CVE-2018-6376, affects versions 3.7.0 through 3.8.3. The issue was reported to Joomla by RIPS Technologies on January 17 and a patch was proposed by the CMS’s developers the same day.

In a blog post published on Tuesday, RIPS revealed that the vulnerability found by its static code analyzer is a SQL injection that can be exploited by an authenticated attacker with low privileges (i.e. Manager account) to obtain full administrator permissions.

“An attacker exploiting this vulnerability can read arbitrary data from the database. This data can be used to further extend the permissions of the attacker. By gaining full administrative privileges she can take over the Joomla! installation by executing arbitrary PHP code,” said RIPS researcher Karim El Ouerghemmi.

The researcher explained that this is a two-phase attack. First, the attacker injects arbitrary content into the targeted site’s database, and then they create a special SQL query that leverages the previously injected payload to obtain information that can be used to gain admin privileges.
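The two-phase pattern described above is commonly called second-order SQL injection. The sketch below reproduces the general idea against a toy in-memory SQLite database. It is not Joomla's code and not the actual exploit for CVE-2018-6376; the table names, function names and payload are all invented for illustration.

```python
import sqlite3
from typing import List


def make_db() -> sqlite3.Connection:
    """Create a toy database with one privileged user and a preferences table."""
    db = sqlite3.connect(":memory:")
    db.execute("CREATE TABLE users (name TEXT, secret TEXT)")
    db.execute("CREATE TABLE prefs (owner TEXT, favourite TEXT)")
    db.execute("INSERT INTO users VALUES ('admin', 'admin-secret')")
    return db


def save_pref(db: sqlite3.Connection, owner: str, favourite: str) -> None:
    # Phase 1: the value is stored with a bound parameter, so the insert itself
    # is safe, but the payload is kept verbatim in the database.
    db.execute("INSERT INTO prefs VALUES (?, ?)", (owner, favourite))


def _stored_pref(db: sqlite3.Connection, owner: str) -> str:
    row = db.execute("SELECT favourite FROM prefs WHERE owner = ?", (owner,)).fetchone()
    return row[0]


def lookup_unsafe(db: sqlite3.Connection, owner: str) -> List[str]:
    # Phase 2 (bug): the stored value is trusted and concatenated into a new query.
    query = "SELECT name FROM users WHERE name = '" + _stored_pref(db, owner) + "'"
    return [row[0] for row in db.execute(query)]


def lookup_safe(db: sqlite3.Connection, owner: str) -> List[str]:
    # Fix: bind the stored value as a parameter, exactly like user input.
    rows = db.execute("SELECT name FROM users WHERE name = ?", (_stored_pref(db, owner),))
    return [row[0] for row in rows]


demo_db = make_db()
save_pref(demo_db, "manager", "x' UNION SELECT secret FROM users WHERE name = 'admin")
leaked = lookup_unsafe(demo_db, "manager")
blocked = lookup_safe(demo_db, "manager")
```

With the unsafe lookup, the stored payload turns the second query into a `UNION` that returns the admin's secret; with the parameterized lookup, the same payload is treated as a plain string and matches nothing. The general lesson is that values read back from the database deserve the same treatment as fresh user input.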

This is not the first time RIPS has found a vulnerability in Joomla. In September, the company reported identifying a flaw that could have been exploited by an attacker to obtain an administrator’s username and password by guessing the credentials character by character.

Questionable Interpretation of Cybersecurity's Hidden Labor Cost

7.2.2018 securityweek Cyber

Report Claims a 2,000 Employee Organization Spends $16 Million Annually on Incident Triaging

The de facto standard for cybersecurity has always been detect and respond: detect a threat and respond to it, either by blocking its entry or clearing its presence. A huge security industry has evolved over the last two decades based on this model; and most businesses have invested vast sums in implementing the approach. It can be described as 'detect-to-protect'.

In recent years a completely different isolation cyber security paradigm has emerged. Rather than detect threats, simply isolate applications from them. This is achieved by running the app in a safe container where malware can do no harm. If an application is infected, the container and the malware are abandoned, and a clean version of the application is loaded into the container. There is no need to spend time and money on threat detection since the malware can do no harm. This is the isolation model.

The difficulty for vendors of isolation technology is that potential customers are already heavily invested in the detect paradigm. Getting them to switch to isolation is tantamount to asking them to abandon their existing investment as a waste of money.

Bromium, one of the earliest and leading isolation companies, has chosen to demonstrate the unnecessary continuing manpower cost of operating a detect-to-protect model, together with the unnecessary cybersecurity technology that supports it.

Bromium commissioned independent market research firm Vanson Bourne to survey 500 CISOs (200 in the U.S.; 200 in the UK; and 100 in Germany) in order to understand and demonstrate the operational cost of detect-to-protect. All the surveyed CISOs are employed by firms with between 1,000 and 5,000 employees, allowing the research to quote figures based on an average organization of 2,000 employees.

The bottom line of this research (PDF) is that a company with 2,000 employees spends $16.7 million every year on detect-to-protect. No comparable figure is given for an isolation model, but the reader is allowed to assume it would be considerably less.

The total cost is achieved by combining threat triaging costs, computer rebuilds, and emergency patching costs to provide the overall labor cost, plus the technology cost of nearly $350,000. The implication is that it is not so worrying to abandon $350,000 for a saving of $16 million -- and indeed, that would be true if the manpower costs are valid. But they are questionable.

All costs in the report are based on figures returned by the survey respondents. For example, according to the report, "Our research showed that enterprises issue emergency patches five times per month on average, with each fix taking 13 hours to deploy. That’s 780 hours a year, which—multiplied by the $39.24 average hourly rate for a cybersecurity professional—incurs costs of $30,607 per year."

But since these are emergency patches, we can add an additional $19,900 in overtime and/or contractor costs: a total of $49,900 every year that could be all but eliminated by switching to an isolation model.

The cost of computer rebuilds comes from the cost of rebuilding compromised computers that detect-to-protect has failed to protect. "On average," says the report, "organizations rebuild 51 devices every month, with each taking four hours to rebuild—equating to 2,448 hours each year. When multiplied by the average hourly wage of a cybersecurity professional, $39.24, that’s an average cost of $96,059 per year."
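The patching and rebuild figures quoted from the report can be checked with a few lines of Python. The sketch below simply multiplies monthly events by hours per event and the report's $39.24 hourly rate; the function and variable names are assumptions.

```python
from typing import Tuple

HOURLY_RATE_USD = 39.24  # average hourly rate quoted in the report


def annual_labor_cost(events_per_month: int, hours_per_event: int,
                      hourly_rate: float = HOURLY_RATE_USD) -> Tuple[int, float]:
    """Return (hours per year, cost per year) for a recurring monthly task."""
    hours_per_year = events_per_month * 12 * hours_per_event
    return hours_per_year, hours_per_year * hourly_rate


patch_hours, patch_cost = annual_labor_cost(5, 13)      # emergency patches
rebuild_hours, rebuild_cost = annual_labor_cost(51, 4)  # device rebuilds
```

The results match the report: 780 hours and about $30,607 for emergency patching, and 2,448 hours and about $96,059 for rebuilds.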

All these costs would seem to be realistic for a detect-to-protect model. The implication is that a switch to the isolate model would save nearly $500,000 per year to offset the cost of isolation. But the report goes much further, and suggests that much of a colossal $16 million can also be saved every year by an organization with 2,000 employees that will no longer require incident triaging by the security team.

How? "Well," claims the report, "on average SOC teams triage 796 alerts per week, taking an average of 10 hours per alert—that’s 413,920 hours across the year. When you consider that the average hourly rate for a cybersecurity professional is $39.24, that’s an annual average cost of more than $16 million each year."

The math works. But an alternative way of looking at these figures is that 7,960 hours of triaging per week would take more than 47 employees doing nothing but triaging 24 hours a day, seven days a week. Frankly, I doubt if any company with 2,000 employees does anything near this amount of triaging. It is, I suggest, misleading to state bluntly (as the report does): "Organizations spend $16 million per year triaging alerts."
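The same check can be run on the triage claim. The sketch below recomputes the report's annual figures and then converts the weekly workload into headcount, both for round-the-clock work and for a 40-hour working week. The 40-hour week is an assumption added here for comparison and does not appear in the report.

```python
from typing import Dict

HOURLY_RATE_USD = 39.24          # average hourly rate quoted in the report
HOURS_IN_A_WEEK = 24 * 7         # round-the-clock, as in the comparison above
ASSUMED_WORK_WEEK_HOURS = 40     # assumption: a standard full-time week


def triage_load(alerts_per_week: int, hours_per_alert: int) -> Dict[str, float]:
    """Break the report's triage claim into hours, cost and implied headcount."""
    weekly_hours = alerts_per_week * hours_per_alert
    annual_hours = weekly_hours * 52
    return {
        "weekly_hours": weekly_hours,
        "annual_hours": annual_hours,
        "annual_cost_usd": annual_hours * HOURLY_RATE_USD,
        "staff_around_the_clock": weekly_hours / HOURS_IN_A_WEEK,
        "staff_at_40h_week": weekly_hours / ASSUMED_WORK_WEEK_HOURS,
    }


triage = triage_load(796, 10)
```

The report's 413,920 hours and roughly $16.2 million a year follow directly from its inputs. The implied headcount is a little over 47 people working without a break, or 199 full-time analysts on a 40-hour week, which would be about a tenth of a 2,000-employee organization.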

“Application isolation provides the last line of defense in the new security stack and is the only way to tame the spiraling labor costs that result from detection-based solutions,” says Gregory Webb, CEO at Bromium. “Application isolation allows malware to fully execute, because the application is hardware isolated, so the threat has nowhere to go and nothing to steal. This eliminates reimaging and rebuilds, as machines do not get owned. It also significantly reduces false positives, as SOC teams are only alerted to real threats. Emergency patching is not needed, as the applications are already protected in an isolated container. Triage time is drastically reduced because SOC teams can analyze the full kill chain.”

All of this is perfectly valid -- except for the $16 million annual detect-to-protect triaging claim. SecurityWeek has invited Bromium to comment on our concerns, and will update this article with any response.

Capable Luminosity RAT Apparently Killed in 2017

7.2.2018 securityweek Virus

The prevalence of the Luminosity remote access Trojan (RAT) is fading away after the malware was supposedly killed half a year ago, Palo Alto Networks says.

First seen in April 2015, Luminosity, also known as LuminosityLink, has seen broad use among cybercriminals, mainly due to its low price and long list of capabilities. Last year, Nigerian hackers used the RAT in attacks aimed at industrial firms.

Luminosity’s author might have claimed that the RAT was a legitimate tool, but its features told a different story: surveillance (remote desktop, webcam, and microphone), a smart keylogger (record keystrokes, target specific programs, keylogger viewer), a crypto-currency miner, and a distributed denial of service (DDoS) module.

Earlier this week, Europol’s European Cybercrime Centre (EC3) and the UK’s National Crime Agency (NCA) announced a law enforcement operation targeting sellers and users of the Luminosity Trojan, but Palo Alto says the threat appears to have died about half a year ago, long before this announcement.

The luminosity[.]link and luminosityvpn[.]com domains, both associated with the malware, have been taken down as well. In fact, sales of the RAT through luminosity[.]link ceased in July 2017, and customers started complaining that their licenses no longer worked.

With Luminosity’s author, who goes by the online handle of KFC Watermelon, keeping a low profile and closing down sales, and with the author of the Nanocore RAT already arrested, speculation emerged that the developer had been arrested as well. It was also suggested that he might have handed over his customer list.

To date, however, no report of an arrest in the case of the Luminosity author has emerged, and Europol’s announcement focuses on the RAT’s users, without mentioning the developer. According to Palo Alto, this author (who also built Plasma RAT) lives in Kentucky, which would also explain his online handle.

The security firm collected over 43,000 unique Luminosity samples during the two years when the threat was being sold, and says that thousands of customers submitted samples for analysis.

To verify legitimate use of the RAT, the command and control servers had to contact a licensing server. In July 2017, researchers observed a sharp drop in sales and the licensing server went down, although some samples were still being seen. Palo Alto believes the RAT’s remaining prevalence was likely fueled by cracked versions, as development had already stopped.

“Based on our analysis and the recent Europol announcement, it does seem though that LuminosityLink is indeed dead, and we await news of what has indeed happened to the author of this malware. In support of this, we have seen LuminosityLink prevalence drop significantly and we believe any remaining observable instances are likely due to cracked versions,” Palo Alto notes.

The researchers also note that, although some of Luminosity’s features might be put to legitimate use, the “preponderance of questionable or outright illegitimate features discredit any claims to legitimacy” that the RAT’s author might have.

The Argument Against a Mobile Device Backdoor for Government

7.2.2018 securityweek Mobil

Just as the Scope of 'Responsible Encryption' is Vague, So Too Are the Technical Requirements Necessary to Achieve It

The 'responsible encryption' demanded by law enforcement and some politicians will not prevent criminals from 'going dark', will weaken cyber security for innocent Americans, and will hurt the U.S. economy. At the same time, there are existing legal methods for law enforcement to gain access to devices without requiring new legislation.

These are the conclusions of Riana Pfefferkorn, cryptography fellow at the Center for Internet and Society at the Stanford Law School, in a paper published Tuesday titled The Risks of “Responsible Encryption” (PDF).

One of the difficulties in commenting on government proposals for responsible encryption is that there are no proposals -- merely demands that it be introduced. Pfefferkorn consequently first analyzes the various comments of two particularly vocal proponents, U.S. Deputy Attorney General Rod Rosenstein and the current director of the FBI, Christopher Wray, to understand what they, and other proponents, might be seeking.

Wray seems to prefer a voluntary undertaking from the technology sector. Rosenstein is looking for a federal legislative approach. Rosenstein seems primarily concerned with mobile device encryption. Wray is also concerned with access to encrypted mobile devices (and possibly other devices), but sees responsible encryption also covering messaging apps (but perhaps not other forms of data in transit).

Just as the scope of 'responsible encryption' is vague, so too are the technical requirements necessary to achieve it.

"The only technical requirement that both officials clearly want," concludes Pfefferkorn, "is a key-escrow model for exceptional access, though they differ on the specifics. Rosenstein seems to prefer that the provider store its own keys; Wray appears to prefer third-party key escrow."

The basic argument is that golden keys to devices and/or messaging apps should be maintained somewhere that law enforcement can access with a court order: that is, some form of key escrow. This is a slightly lesser ambition than that sought by government in the mid-1990s in the discussions between government (then, as now, not just in the U.S.) and technologists during what became known as the First Crypto War. At that time, government sought much wider control over encryption, and access to everyone's computer at chip level. New America published a history (PDF) of that era in 2015.

Rosenstein has argued that device and application manufacturers already have and use a form of key escrow to manage and perform software updates. The argument is that if they can do this for themselves, they can do it for government to prevent criminal communications from 'going dark'. Pfefferkorn, however, offers four arguments against this.

First, the scale is completely different. The software update key is known and used by only a very small number of internal and highly trusted staff, and then used only infrequently. But, suggests Pfefferkorn, "with law enforcement agencies from around the globe sending in requests to the manufacturer or third-party escrow agent at all hours (and expecting prompt turn-around), the decryption key would likely be called into use several times a day, every day. This, in turn, means the holder of the key would have to provide enough staff to comply expeditiously with all those demands."

Increased use of the key increases the risk of loss through human error or malfeasance (such as extortion or bribery) -- and the loss of that key could be catastrophic.

Second, attackers will seek to exploit the process through social engineering, with spear-phishing attacks against the vendor's or escrow agent's employees; and it is generally only a matter of time before spear-phishing succeeds. The likelihood of spear-phishing succeeding will increase with the sheer volume of LEA demands received. The FBI has claimed that it had around 7,800 seized phones it could not unlock in the last fiscal year. These alone, not including any phones seized by the thousands of State and local law enforcement offices, would average more than 20 key requests every day, making a spear-phishing attempt less obvious.
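The daily request volume can be reproduced with simple arithmetic. The sketch below assumes one key request per locked phone, spread evenly over the year; the 260-working-day variant is an added assumption for comparison and does not come from the paper.

```python
# Rough estimate of key-escrow request volume from the FBI figure above.
# Assumption: each locked phone produces exactly one key request.
LOCKED_PHONES = 7_800            # FBI figure for the last fiscal year
CALENDAR_DAYS = 365
WORKING_DAYS = 260               # assumed: 52 weeks x 5 days


def requests_per_day(total_requests: int, days: int) -> float:
    """Average number of requests per day over the given number of days."""
    return total_requests / days


print(f"{requests_per_day(LOCKED_PHONES, CALENDAR_DAYS):.1f}")  # 21.4
print(f"{requests_per_day(LOCKED_PHONES, WORKING_DAYS):.1f}")   # 30.0
```

Either way, the key holder would be handling requests continuously, which is the scale problem Pfefferkorn describes.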

Third, it would harm the U.S. economy both through loss of market share at home and abroad (since security could not be guaranteed), and through the economic effect of ID and IP theft following the likely abuse of the system.

Finally, Pfefferkorn argues that access to devices through key escrow still won't necessarily provide access to communications or content if these are separately encrypted by the user. "If the user chooses a reasonable password for the app," she says, "then unlocking the phone will not do any good... In short, an exceptional-access mandate for devices will never be completely effective."

Pfefferkorn goes further by suggesting that there are already numerous ways in which LEAs can obtain information from mobile devices. If the device is locked with a biometric identifier, the police can compel its owner to unlock it (not so with a password lock). If it is synced with other devices or backed up to the cloud, then access may be easier from these other destinations. Law enforcement already claims wide-ranging powers under the Stored Communications Act to access stored communications and transactional records held by ISPs -- as seen in the long-running battle between Microsoft and the government.

Metadata is another source of legal information. This can be gleaned from message headers, while cell towers can provide location and journey tracking. Far more metadata is likely to become available through the internet of things.

Finally, there are forensics and 'government hacking' opportunities. In early 2016 the FBI asked, and then got a court order, for Apple to provide access to the locked iPhone of Syed Rizwan Farook, known as the San Bernardino Shooter. Apple declined -- but either through contract hackers or a forensics company such as Cellebrite, the FBI eventually succeeded without help from Apple. "The success of tools such as Cellebrite’s in circumventing device encryption," says Pfefferkorn, "stands as a counterpoint to federal officials’ asserted need to require device vendors by law to weaken their own encryption."

Pfefferkorn's opinion in the ongoing argument for law enforcement to be granted an 'exceptional-access' mandate is clear: "It would be unwise."

Automated Hacking Tool Autosploit Causes Concerns Over Mass Exploitation

7.2.2018 securityaffairs Exploit

The Autosploit hacking tool was developed to automate the compromise of remote hosts by collecting targets automatically through the Shodan.io API.

Users can define platform search queries, such as Apache or IIS, to gather the targets to be attacked. After gathering the targets, the tool's exploit component uses Metasploit modules to compromise the hosts.

The Metasploit modules to be used are selected by comparing the name of each module with the search query. The developer also added a type of attack in which all modules are used at once. As the author noted, the Metasploit modules were chosen with the intent of enabling Remote Code Execution as well as gaining Reverse TCP Shell or Meterpreter sessions.

Experts hold different opinions about the release of the tool. As noted by Bob Noel, Director of Strategic Relationships and Marketing at Plixer:

“AutoSploit doesn’t introduce anything new in terms of malicious code or attack vectors. What it does present is an opportunity for those who are less technically adept to use this tool to cause substantial damage. Once initiated by a person, the script automates and couples the process of finding vulnerable devices and attacking them. The compromised devices can be used to hack Internet entities, mine cryptocurrencies, or be recruited into a botnet for DDoS attacks. The release of tools like these exponentially expands the threat landscape by allowing a wider group of hackers to launch global attacks at will”.

On the other hand, Chris Roberts, chief security architect at Acalvio, states:

“The kids are not more dangerous. They already were dangerous. We’ve simply given them a newer, simpler, shinier way to exploit everything that’s broken. Maybe we should fix the ROOT problem”.

The recent revelation that adult sex toys can be accessed remotely by hackers using Shodan illustrates a scenario in which the tool could pose a grave danger.

These risks have always existed. The release of the tool is not in itself a new attack vector, according to Gavin Millard, Technical Director at Tenable:

“Most organizations should have a process in place for measuring their cyber risk and identifying issues that could be easily leveraged by automated tools. For those that don’t, this would be an ideal time to understand where those exposures are and address them before a curious kid pops a web server and causes havoc with a couple of commands”.

A recommendation is given by Jason Garbis, VP at Cyxtera: “In order to protect themselves, organizations need to get a clear, accurate, and up-to-date picture of every service they expose to the Internet. Security teams must combine internal tools with external systems like Shodan to ensure they’re aware of all their points of exposure”.
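The recommendation to combine internal tools with an external view can be illustrated with a minimal sketch. It assumes the organization already has two lists of (host, port) pairs: one from its own asset inventory and one from an external scan or search-engine export. Both lists, the `Service` type and the `unknown_exposures` helper are hypothetical; the sketch makes no real Shodan API calls, and the addresses come from a documentation-only range.

```python
from typing import Iterable, List, NamedTuple


class Service(NamedTuple):
    """An internet-facing service, identified by host and port."""
    host: str
    port: int


def unknown_exposures(documented: Iterable[Service],
                      observed: Iterable[Service]) -> List[Service]:
    """Return services seen from outside that the internal inventory lacks."""
    return sorted(set(observed) - set(documented))


# Hypothetical data for illustration only.
inventory = [Service("192.0.2.10", 443)]
external_view = [
    Service("192.0.2.10", 443),
    Service("192.0.2.10", 8080),   # forgotten admin interface
    Service("192.0.2.25", 23),     # telnet on an unmanaged device
]

for service in unknown_exposures(inventory, external_view):
    print(f"undocumented exposure: {service.host}:{service.port}")
```

Anything reported by such a comparison is a point of exposure that the security team did not know about and that automated tools could find first.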

Sources:

https://www.scmagazine.com/autosploit-marries-shodan-metasploit-puts-iot-devices-at-risk/article/740912/

https://motherboard.vice.com/en_us/article/xw4emj/autosploit-automated-hacking-tool

https://arstechnica.com/information-technology/2018/02/threat-or-menace-autosploit-tool-sparks-fears-of-empowered-script-kiddies/

https://www.wired.com/story/autosploit-tool-makes-unskilled-hacking-easier-than-ever/

https://n0where.net/automated-mass-exploiter-autosploit

http://www.informationsecuritybuzz.com/expert-comments/autosploit/

https://securityledger.com/2018/02/episode-82-skinny-autosploit-iot-hacking-tool-get-ready-gdpr

https://www.kitploit.com/2018/02/autosploit-automated-mass-exploiter.html

https://www.darkreading.com/threat-intelligence/autosploit-mass-exploitation-just-got-a-lot-easier-/a/d-id/1330982

http://www.securityweek.com/autosploit-automated-hacking-tool-set-wreak-havoc-or-tempest-teapot

Hackers can remotely access adult sex toys compromising at least 50,000 users

7.2.2018 securityaffairs Hacking

Researchers discovered that sex toys from German company Amor Gummiwaren GmbH and its cloud platform are affected by critical security flaws.

The findings are the result of a master's thesis by Werner Schober, written in cooperation with SEC Consult and the University of Applied Sciences St. Pölten.

In an astonishing revelation, multiple vulnerabilities were discovered in “Vibratissimo” sex toys and in the associated cloud platform that compromised not only the privacy and data protection but also the physical safety of owners.

The database containing all customer data was accessible via the internet, exposing explicit images, chat logs, sexual orientation, email addresses and passwords in clear text.

Because image identifiers were predictable numbers and no authorization check was performed, all explicit images of users could be enumerated, compromising their identities. Attackers could even control the devices without the users' consent, either over the internet or from nearby, within Bluetooth range. These are only a few of the dangers users are exposed to once connected to the world of the Internet of Things (IoT).

The Internet of Things (IoT) comprises a myriad of devices connected to the internet and has evolved to the point where it is present in many products used on a daily basis, from cars to home utilities. Against this background, a new sub-category of the IoT has emerged, named the Internet of Dildos (IoD). The IoD covers every networked device designed to give pleasure. According to the article, the term given to this area of research in 1975 is the following: “Teledildonics (also known as “cyberdildonics”) is technology for remote sex (or, at least, remote mutual masturbation), where tactile sensations are communicated over a data link between the participants”.

The vulnerable products from Amor Gummiwaren GmbH are the following: Vibratissimo Panty Buster, MagicMotion Flamingo, and Realov Lydia. The researchers' analysis focused on the Vibratissimo Panty Buster, a sex toy that can be controlled remotely with mobile applications (Android, iOS). The mobile application, the backend server, the hardware, and the firmware are developed by a third-party company. The application offers many interactive communication and sharing features, effectively creating a social network where users can expand their experience. These features include searching for other users, creating friends lists, video chat, a message board, and sharing image galleries that are stored across the social network.

The vulnerabilities found were: Customer Database Credential Disclosure, Exposed Administrative Interfaces on the Internet, Cleartext Storage of Passwords, Unauthenticated Bluetooth LE Connections, Insufficient Authentication Mechanism, Insecure Direct Object Reference, Missing Authentication in Remote Control, and Reflected Cross-Site Scripting. Looking at them more closely, all credentials of the Vibratissimo database environment were leaked on the internet, along with the phpMyAdmin interface, which could have allowed hackers to access the database and dump all its content.

The phpMyAdmin interface was accessible through the URL http://www.vibratissimo.com/phpmyadmin/, and the passwords were stored unencrypted, in clear text. The database may have contained the following data: usernames, session tokens, cleartext passwords, chat histories and explicit image galleries created by the users themselves. A .DS_Store file and config.ini.php were also found on the Vibratissimo web server, allowing hackers to exploit attack vectors such as directory listing and to discover the operating system, which in this case is Mac OS X.
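To illustrate the alternative to cleartext password storage, the following is a minimal sketch using only the Python standard library. It is not the vendor's fix; the function names and the iteration count are illustrative assumptions, and a production system would pick a work factor based on current guidance.

```python
import hashlib
import hmac
import secrets
from typing import Tuple

ITERATIONS = 200_000  # illustrative work factor, not a recommendation


def hash_password(password: str) -> Tuple[bytes, bytes]:
    """Return (salt, digest) so the password itself is never stored."""
    salt = secrets.token_bytes(16)  # unique random salt per user
    digest = hashlib.pbkdf2_hmac(
        "sha256", password.encode("utf-8"), salt, ITERATIONS
    )
    return salt, digest


def verify_password(password: str, salt: bytes, expected: bytes) -> bool:
    """Recompute the digest and compare it in constant time."""
    candidate = hashlib.pbkdf2_hmac(
        "sha256", password.encode("utf-8"), salt, ITERATIONS
    )
    return hmac.compare_digest(candidate, expected)


salt, stored = hash_password("correct horse battery staple")
print(verify_password("correct horse battery staple", salt, stored))  # True
print(verify_password("guess", salt, stored))                         # False
```

With this scheme, a dumped database reveals only salts and digests, so an attacker has to brute-force each password separately instead of reading them directly.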

Also, as disclosed by the researchers, the remote control of the vibrator poses great dangers to users. The first relates to the Bluetooth LE connection between the vibrator and the smartphone application, which is open to eavesdropping, replay and MitM attacks. Although the device offers several pairing methods, the most dangerous is “no pairing”, as noted in the report. This method allows hackers to read information from the device as well as write data to it, so a hacker within range could take control of the device. In addition, because of the lack of authentication, a hacker can create a remote control link of his own and then decrement or increment the ID to gain direct access to the link used by another person. A reflected cross-site scripting attack is also possible but, as noted by the researchers, it is not as dangerous as the other security issues.
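The link-guessing problem described above comes from sequential identifiers combined with missing authorization. A minimal sketch of the usual countermeasures follows: random, unguessable link IDs plus an explicit authorization check. The in-memory `links` store and the function names are hypothetical and do not reflect how the vendor's backend works.

```python
import secrets
from typing import Dict, Set

# Hypothetical in-memory store: link ID -> users allowed to use the link.
links: Dict[str, Set[str]] = {}


def create_link(owner: str) -> str:
    """Create a control link with a random ID instead of a sequential number."""
    link_id = secrets.token_urlsafe(16)  # 128 bits of randomness
    links[link_id] = {owner}
    return link_id


def grant_access(link_id: str, owner: str, guest: str) -> None:
    """Let an authorized user of the link share it with a chosen guest."""
    if owner not in links.get(link_id, set()):
        raise PermissionError("only an authorized user may share this link")
    links[link_id].add(guest)


def can_use_link(link_id: str, user: str) -> bool:
    """Authorization check performed on every request that uses the link."""
    return user in links.get(link_id, set())


link = create_link("alice")
grant_access(link, "alice", "bob")
print(can_use_link(link, "bob"))      # True
print(can_use_link(link, "mallory"))  # False
```

Random IDs make neighbouring links impractical to guess, and the per-request check ensures that even a leaked ID is useless to someone who was never granted access.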

Last but not least, the researchers recommend a complete update of the software and mobile application used by the devices. All users are strongly advised to change their login information and passwords for greater protection. Not all security flaws have been addressed and corrected, so some dangers remain that can be exploited with tools like Shodan and Autosploit. These vulnerabilities are a societal concern, since they pose a grave danger to users' reputations, which in the worst case can lead to suicide.

Sources:

http://www.securitynewspaper.com/2018/02/03/internet-dildos-long-way-vibrant-future-iot-iod/

https://www.sec-consult.com/en/blog/2018/02/internet-of-dildos-a-long-way-to-a-vibrant-future-from-iot-to-iod/index.html

https://www.sec-consult.com/en/blog/advisories/multiple-critical-vulnerabilities-in-whole-vibratissimo-smart-sex-toy-product-range/index.html

https://www.theregister.co.uk/2018/02/02/adult_fun_toy_security_fail/

http://www.zdnet.com/article/this-smart-vibrator-can-be-easily-hacked-and-remotely-controlled-by-anyone

https://mashable.com/2018/02/01/internet-of-dildos-hackers-teledildonics

https://www.cnet.com/news/beware-the-vibratissimo-smart-vibrator-is-vulnerable-to-hacks/

http://www.wired.co.uk/article/sex-toy-bluetooth-hacks-security-fix

https://www.forbes.com/sites/thomasbrewster/2018/02/01/vibratissimo-panty-buster-sex-toy-multiple-vulnerabilities/#37ec1d25a944