IBM Describes AI-powered Malware That Can Hide Inside Benign Applications

13.8.18 securityweek Virus

IBM Researchers Describe "DeepLocker" as a Stealthy, Evasive, Targeted Attack Methodology in a Class of Its Own

Cybersecurity is an arms-race game of leapfrog. Adversaries gain the upper hand until they are leapfrogged by a superior technology from the defenders. That advantage lasts only as long as it takes the adversaries to develop a new technology or methodology, at which point a new defensive technology is required. We have reached the point where many cybersecurity vendors claim to have gained the upper hand against adversaries through the use of artificial intelligence (AI) and machine learning (ML) threat detection.

But deep down, everyone knows this game of leapfrog will continue. Adversaries are expected -- and in some cases have started -- to use their own applications of AI and ML to defeat those of the defenders. At the Black Hat conference on Thursday, IBM presented one way that black hats could do just that: a new class of AI-enhanced malware attack it calls DeepLocker.

Dr. Marc Ph. Stoecklin, principal research scientist and manager, cognitive cybersecurity intelligence, IBM Research, described the methodology to SecurityWeek. This is the IBM team that started Watson within IBM. While the team's primary purpose is to develop new AI applications to enhance security and improve threat detection, "We also need to understand where attackers are going," said Stoecklin. "So, we spend quite a lot of time understanding the threat landscape, evolutions of technologies, and how attackers are benefitting from the technology shifts going on."

AI is perhaps the major current technology shift. "With the progression and democratization of AI," warned Stoecklin, "there is a new shift going on where attackers can very easily and very quickly weaponize existing AI tools that are open source, and build highly effective and capable attacks." DeepLocker is the result of research into what is already possible, using only freely available open-source AI technology. Adversaries do not need to develop anything new; they merely need to use current technology in a new manner.

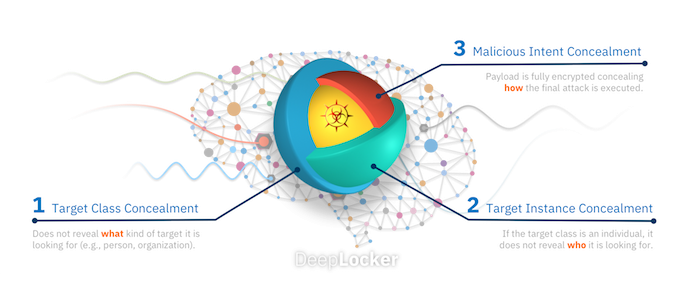

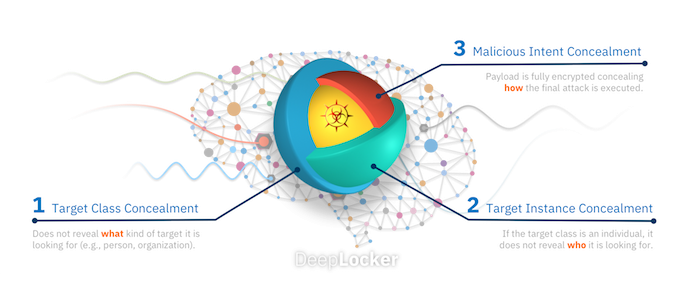

"DeepLocker," Stoecklin told SecurityWeek, "uses AI to hide any malicious payload invisibly within a benign, popular application -- for example, any popular web conferencing application. With DeepLocker we can embed a malicious payload and hide it within the videoconferencing application. Through the use of AI," he added, "the conditions to unlock the malicious behavior will be almost impossible to reverse engineer."

In short, DeepLocker is a methodology for hiding malware within a legitimate application in a manner that would prevent any researcher or threat hunter from knowing that it is there. But DeepLocker goes further. The key to unlocking and detonating the malware is the biometric recognition of a predefined target. This means that DeepLocker malware can be widely distributed to millions of users, but it will only ever activate against the precise target or targets.

"You can think of this capability as similar to a sniper attack in contrast to the 'spray and pray' approach of traditional malware," writes Stoecklin in an associated blog. "It is designed to be stealthy and fly under the radar, avoiding detection until the very last moment when a specific target has been recognized. What makes this AI-powered malware particularly dangerous is that, similar to how nation-state malware works, it could infect millions of systems without ever being detected, only unleashing its malicious payload to specified targets which the malware operator defines. But unlike nation-state malware, it is a concept that is feasible in the civilian and commercial realms."

The military 'sniper' allusion is telling. IBM would not be drawn on whether any nation-states are already using this particular technique; but it is certainly not impossible. Consider Stuxnet. It was a targeted attack against Iran, but it escaped and was ultimately reverse engineered and understood -- leading to considerable embarrassment to the U.S. government, and to a lesser degree Israel.

Had the Stuxnet payload been concealed using the DeepLocker methodology, it would (almost certainly) never have escaped and never have been reverse engineered. Attribution becomes almost impossible, and nation-states could deliver highly targeted attacks with a higher degree of impunity. Zero-day exploits could be employed with less risk that defenders would reverse engineer them and create defenses.

In the Black Hat presentation on Thursday, IBM used a WannaCry payload embedded within DeepLocker in a video conferencing application, triggered by facial recognition of the intended victim. This is a particularly pernicious example. A targeted wiper triggered in the same way could destroy the target's computer while removing all evidence of what had happened.

"Basically," explained Stoecklin, "we can train the AI to recognize a specific person, a specific victim or target -- and only when that person is sitting in front of a computer and can be recognized via the web cam, then a key can be derived that allows the software to unlock the malicious behavior."
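
The reason such a trigger resists reverse engineering can be illustrated with a minimal sketch. It is not IBM's implementation: DeepLocker is described as deriving the key through a trained AI model, whereas this sketch substitutes a plain SHA-256 hash over discrete attribute strings. The function names (`derive_key`, `key_tag`, `unlocks`) and the attribute values are hypothetical. The point is only that the shipped data holds a one-way tag, so an analyst inspecting it cannot read off which attributes produce the key.

```python
import hashlib
import hmac


def derive_key(attributes: tuple[str, ...]) -> bytes:
    """Derive a 32-byte key from observed attributes via a one-way hash.

    Assumption: attributes are exact strings. A real recognizer would have
    to map noisy observations to a stable value first.
    """
    material = "\x1f".join(attributes).encode("utf-8")
    return hashlib.sha256(material).digest()


def key_tag(key: bytes) -> bytes:
    """Return a tag that lets code check a candidate key without storing it."""
    return hashlib.sha256(b"tag:" + key).digest()


# Computed here only for demonstration. In the scenario described, only the
# resulting tag bytes would be embedded; the attribute values would not be.
SHIPPED_TAG = key_tag(derive_key(("label:target", "site:example")))


def unlocks(observed: tuple[str, ...]) -> bool:
    """True only if the observed attributes reproduce the expected key."""
    candidate = derive_key(observed)
    return hmac.compare_digest(key_tag(candidate), SHIPPED_TAG)


if __name__ == "__main__":
    print(unlocks(("label:target", "site:example")))  # matching attributes
    print(unlocks(("label:other", "site:example")))   # any other observer
```

For defenders, the consequence is that static inspection of the tag says nothing about the target; the condition is only revealed when the matching attributes are actually observed.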

The trigger can be anything -- facial recognition, behavioral biometrics, or the presence of a particular application on the system to help target a specific group or company. "Take yourself," IBM said. "As a journalist you do a lot of writing and will have your own stylometry. We could train the AI to recognize a concentration of your documents with your stylometry, and trigger on that basis. You add a couple of more -- geolocation, IP address -- and you only need a few details to uniquely recognize and identify anyone in the world."
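
The claim that a few details suffice can be checked with rough arithmetic. The sketch below is illustrative only: the population figure is an approximation, the three filter fractions are invented for the example, and the filters are assumed to be independent, which real attributes seldom are.

```python
import math

WORLD_POPULATION = 7_600_000_000  # rough 2018 figure, an assumption


def bits_to_single_out(population: int) -> float:
    """Bits of identifying information needed to pick one person."""
    return math.log2(population)


def remaining_candidates(population: int, fractions: list[float]) -> float:
    """Expected candidates left after independent filters.

    Each fraction is the share of the population that passes one filter.
    """
    remaining = float(population)
    for fraction in fractions:
        remaining *= fraction
    return remaining


# Hypothetical selectivities, not measured values.
FILTERS = {
    "geolocation (same city)": 1 / 10_000,
    "IP address range": 1 / 1_000,
    "writing style (stylometry)": 1 / 1_000,
}

if __name__ == "__main__":
    print(f"bits needed: {bits_to_single_out(WORLD_POPULATION):.1f}")
    left = remaining_candidates(WORLD_POPULATION, list(FILTERS.values()))
    print(f"expected candidates left: {left:.2f}")
```

Singling out one person in a population of this size takes about 33 bits of information. Under these assumed fractions, three filters already leave fewer than one expected candidate.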

This just leaves delivery. "Upstream," suggested IBM. Like CCleaner. CCleaner was infected by attackers and downloaded by millions of users. If the infection had been hidden in DeepLocker, only the intended target or targets would have been affected by the malware. Other upstream targets could include CMS add-ons known to be used by the target.

While the threat seems extreme, its success is not inevitable. The threat comes from the increasing use of AI-powered attacks that challenge traditional rule-based security tools. "We, as defenders," blogs Stoecklin, "also need to lean-in to the power of AI as we develop defenses against these new breeds of attack. A few areas that we should focus on immediately include the use of AI in detectors, going beyond rule-based security, reasoning and automation to enhance the effectiveness of security teams, and cyber deception to misdirect and deactivate AI-powered attacks."

At the same time, not everyone believes that DeepLocker will be undetectable. Ilia Kolochenko, CEO at High-Tech Bridge, comments, "We are still pretty far from AI/ML hacking technologies that can outperform the brain of a criminal hacker. Of course, cybercriminals are already actively using machine learning and big data technologies to increase their overall effectiveness and efficiency. But," he said, "it will not invent any substantially new hacking techniques or something beyond a new vector of exploitation or attack as all of those can be reliably mitigated by the existing defense technologies. Moreover, many cybersecurity companies also start leveraging machine learning with a lot of success, hindering cybercrime. Therefore, I see absolutely no reason for panic today."